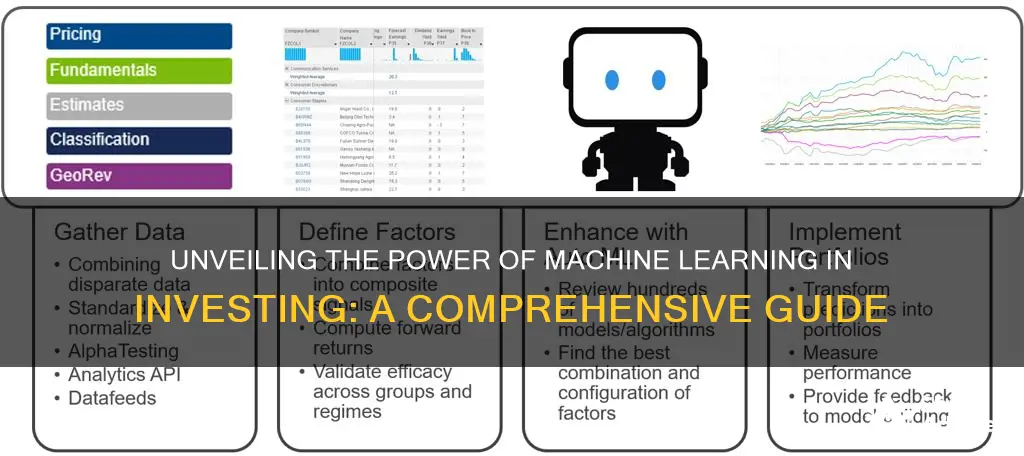

Investing with machine learning is a powerful approach that leverages advanced algorithms and data-driven insights to optimize investment strategies. By analyzing vast amounts of financial data, machine learning models can identify patterns, make predictions, and generate investment recommendations with a level of precision and speed that traditional methods often struggle to match. This technology can process historical market trends, news sentiment, and even real-time market data to inform investment decisions, potentially leading to more informed and timely trades. The process involves training models on historical data, refining them over time, and using these models to make predictions about future market movements, thus enabling investors to make more strategic and potentially profitable choices.

What You'll Learn

- Data Collection: ML algorithms require vast datasets for training and prediction

- Feature Engineering: Selecting and transforming raw data into meaningful features for models

- Model Selection: Choosing appropriate ML models based on task and data characteristics

- Training & Optimization: Techniques to train models, minimize errors, and improve performance

- Risk Management: Strategies to mitigate risks associated with ML-driven investment decisions

Data Collection: ML algorithms require vast datasets for training and prediction

Machine learning algorithms, the backbone of automated investing, rely heavily on data collection as a fundamental process. These algorithms are designed to learn and make predictions or decisions based on patterns and insights derived from data. The quality and quantity of data available directly impact the performance and accuracy of these models.

In the context of investing, data collection involves gathering historical and real-time financial data, including stock prices, market trends, economic indicators, company financial statements, and news sentiment. The more comprehensive and diverse the dataset, the better the machine learning models can understand and predict market behavior. For instance, a model trained on a dataset covering a wide range of companies, industries, and market conditions will likely perform better in making investment recommendations compared to one trained on a limited set of data.

The process of data collection for machine learning in investing is meticulous and often involves multiple sources. It includes scraping financial websites, APIs from stock exchanges, and financial news platforms. Data cleaning and preprocessing are essential steps to ensure the data is consistent, accurate, and relevant. This process might involve handling missing values, removing outliers, and transforming data into a format suitable for machine learning algorithms.

Additionally, the volume of data is crucial. Machine learning algorithms, especially deep learning models, require large datasets to generalize and make accurate predictions. With more data, the models can learn complex patterns and relationships, improving their predictive capabilities. For instance, a model predicting stock prices might require historical data for thousands or millions of stocks to capture market dynamics effectively.

In summary, data collection is a critical phase in developing machine learning models for investing. It involves gathering diverse and extensive financial data, ensuring its quality, and preparing it for model training. The success of these models heavily relies on the availability and quality of data, making data collection a cornerstone of the machine learning-driven investing process.

The Prince of Wales: A Future Investiture?

You may want to see also

Feature Engineering: Selecting and transforming raw data into meaningful features for models

Feature engineering is a crucial step in the process of building predictive models for investment strategies. It involves transforming raw data into a format that can be effectively used by machine learning algorithms to make accurate predictions. This process requires a deep understanding of the data and the problem domain, as well as creativity and domain expertise to identify the most relevant features.

The goal of feature engineering is to extract meaningful information from the raw data that can help the model learn patterns and make predictions. This is achieved by selecting and creating features that are highly correlated with the target variable, which is the variable the model aims to predict. For example, in an investment context, the target variable could be the stock price, and features might include historical stock prices, financial ratios, economic indicators, and market sentiment.

One common approach to feature engineering is to start with a large set of raw data and then apply various techniques to create new features. This can include statistical transformations, such as calculating moving averages or standard deviations, to derive new variables that capture different aspects of the data. For instance, you might calculate the difference between the current stock price and the previous day's closing price to identify trends or volatility. Another technique is to use domain knowledge to create features that are specific to the investment domain. For example, financial analysts might create features like the price-to-earnings ratio or the debt-to-equity ratio, which provide insights into a company's financial health and performance.

Feature selection is another critical aspect of feature engineering. It involves choosing the most relevant features from the engineered set to include in the model. This step is important because including irrelevant or redundant features can lead to overfitting, where the model performs well on the training data but poorly on new, unseen data. Techniques such as correlation analysis, feature importance ranking, and recursive feature elimination can be used to identify the most informative features. For instance, correlation analysis can help determine which features are strongly related to the target variable, while feature importance ranking methods, like SHAP or LIME, can provide insights into the contribution of each feature to the model's predictions.

Additionally, feature engineering often involves handling missing data and dealing with outliers. Missing values can be imputed using techniques like mean imputation or regression imputation, ensuring that the model has complete data for training. Outliers, which are data points that deviate significantly from the rest of the data, can be addressed by either removing them or transforming them to bring them closer to the main cluster of data points. These steps are essential to ensure the quality and reliability of the feature set used for training the machine learning models.

In summary, feature engineering is a complex and iterative process that requires a combination of statistical knowledge, domain expertise, and creativity. It involves transforming raw data into a format that captures the underlying patterns and relationships, while also ensuring that the selected features are relevant and informative for the investment prediction task at hand. By carefully engineering features, investors can improve the performance and generalization ability of machine learning models, leading to more accurate investment decisions.

Investments: Best Bets for Today

You may want to see also

Model Selection: Choosing appropriate ML models based on task and data characteristics

Model selection is a critical step in the process of building a successful machine learning (ML) model for investment strategies. The choice of model depends heavily on the specific task at hand and the characteristics of the data being used. Here's an overview of how to approach model selection in the context of investing with ML:

Understanding the Task: Begin by clearly defining the investment problem you are trying to solve. Are you predicting stock prices, identifying profitable trading signals, or classifying investment opportunities? For example, if the task is to predict stock prices, you might consider models like Linear Regression or Recurrent Neural Networks (RNNs) to capture temporal dependencies. Understanding the nature of the task will guide your model selection.

Data Characteristics: The features and structure of your data play a significant role in model choice. For instance, if your dataset contains time-series data, models like ARIMA (AutoRegressive Integrated Moving Average) or Long Short-Term Memory (LSTM) networks can effectively capture patterns over time. If the data is highly dimensional and contains complex relationships, consider using ensemble methods like Random Forest or Gradient Boosting Machines, which can handle non-linear interactions. Additionally, consider the size of your dataset; small datasets might benefit from simpler models, while larger datasets can accommodate more complex architectures.

Model Complexity and Regularization: The complexity of the model should be carefully considered. More complex models, such as deep neural networks, can capture intricate patterns but may also lead to overfitting, especially with limited data. Regularization techniques like L1 or L2 regularization, dropout, or early stopping can help control overfitting. For instance, in a small-scale investment prediction model, a simpler model might be preferred to avoid overfitting to the training data.

Evaluation and Validation: After selecting a few potential models, it's crucial to evaluate and validate them using appropriate metrics. For regression tasks, Mean Squared Error (MSE) or R-squared can be used. For classification, accuracy, precision, recall, or F1-score might be more suitable. Cross-validation techniques can ensure that the model generalizes well to unseen data.

Domain Knowledge and Experimentation: Incorporating domain expertise is essential. Financial experts can provide insights into the investment landscape, helping to refine feature engineering and model selection. Experimentation is key; try different models, hyperparameters, and feature sets to find the best-performing combination. This iterative process allows for the refinement of the model and its ability to make accurate investment predictions.

Will" de Inversión: Una Guía para Principiantes en Españo

You may want to see also

Training & Optimization: Techniques to train models, minimize errors, and improve performance

Training and optimizing machine learning models for investment strategies is a complex process that involves several key techniques to ensure accurate predictions and robust performance. Here's an overview of the training and optimization process:

Data Preparation and Feature Engineering: The foundation of any machine learning model is high-quality data. In the context of investing, this involves gathering historical financial data, including stock prices, market indices, economic indicators, and any other relevant factors. The data is then preprocessed to handle missing values, outliers, and inconsistencies. Feature engineering is a crucial step where domain knowledge is applied to create meaningful features that capture market dynamics. For example, technical indicators like moving averages, relative strength index (RSI), or momentum measures can be derived from price data. These engineered features provide the model with a more comprehensive understanding of the market, improving its predictive capabilities.

Model Selection and Training: Choosing an appropriate model architecture is essential. Common choices include supervised learning algorithms such as linear regression, decision trees, random forests, and neural networks. For investment applications, deep learning models like recurrent neural networks (RNNs) or convolutional neural networks (CNNs) are often employed due to their ability to handle sequential data and capture complex patterns. The training process involves feeding the preprocessed data into the model, adjusting its parameters through an optimization algorithm (e.g., gradient descent), and iteratively improving its performance. Cross-validation techniques are used to assess the model's generalization ability and prevent overfitting, ensuring it performs well on unseen data.

Regularization and Error Minimization: Overfitting is a critical issue in machine learning, where a model learns the training data too closely and fails to generalize to new, unseen data. Regularization techniques are employed to mitigate this. L1 and L2 regularization, dropout, and early stopping are some methods used to control model complexity and prevent overfitting. During training, the model's performance is evaluated using various error metrics such as mean squared error (MSE), mean absolute error (MAE), or binary cross-entropy, depending on the problem type. These metrics guide the optimization process, helping to adjust the model's parameters to minimize errors.

Hyperparameter Tuning: Hyperparameters are settings that are not learned by the model but are set before the training process. These include learning rate, number of layers in a neural network, regularization strength, and more. Grid search or random search algorithms are used to systematically explore the hyperparameter space and find the best combination that optimizes model performance. This step significantly impacts the model's ability to make accurate predictions and is crucial for fine-tuning the investment strategy.

Ensemble Methods and Model Combination: Ensemble techniques combine multiple models to make predictions, leveraging the strengths of different algorithms. By averaging or stacking predictions from various models, ensemble methods can improve accuracy and robustness. This approach is particularly useful in investment scenarios where multiple models can capture different aspects of market behavior, leading to more reliable forecasts.

Invest Wisely: Strategies for Success

You may want to see also

Risk Management: Strategies to mitigate risks associated with ML-driven investment decisions

Machine learning (ML) has revolutionized the investment landscape, offering powerful tools to analyze vast amounts of data and make informed decisions. However, the very nature of ML-driven investments also introduces unique risks that investors must carefully navigate. Here's an exploration of risk management strategies tailored to the ML investment domain:

Data Quality and Bias:

The foundation of ML-driven investments lies in data. Risks arise when the quality and integrity of this data are compromised. Inaccurate, incomplete, or biased data can lead to flawed models and suboptimal investment choices. To mitigate this, investors should:

- Emphasize Data Governance: Implement robust data governance practices, including data cleansing, validation, and ongoing monitoring for quality.

- Diversify Data Sources: Rely on multiple, independent data sources to cross-validate information and identify potential biases.

- Regular Model Auditing: Periodically audit ML models for bias, fairness, and accuracy, ensuring they remain reliable and ethical.

Model Reliability and Explainability:

ML models, while powerful, can be complex and "black box-like," making it challenging to understand their decision-making processes. This lack of transparency poses risks, especially in regulatory environments demanding explainability.

- Interpretability Techniques: Employ techniques like LIME (Local Interpretable Model-agnostic Explanations) or SHAP (SHapley Additive exPlanations) to provide insights into model predictions.

- Human-in-the-Loop: Incorporate human expertise to review and validate model outputs, ensuring decisions are well-informed and aligned with investment goals.

- Model Testing and Validation: Rigorously test models on diverse datasets and scenarios to assess their robustness and reliability.

Market and Algorithmic Risks:

ML models are trained on historical data, which may not always accurately represent future market conditions. Additionally, algorithmic errors or unexpected market events can impact investment outcomes.

- Stress Testing and Scenario Analysis: Conduct stress tests and scenario analyses to evaluate model performance under extreme market conditions.

- Redundancy and Fail-Safe Mechanisms: Implement redundant systems and fail-safe mechanisms to prevent catastrophic losses in case of model failures or market disruptions.

- Continuous Monitoring and Adaptation: Regularly monitor market trends and adjust ML models accordingly to ensure they remain relevant and effective.

Regulatory and Ethical Considerations:

As ML in investments gains traction, regulatory scrutiny and ethical concerns are paramount.

- Compliance with Regulations: Stay abreast of evolving regulations governing ML in finance and ensure compliance through robust governance frameworks.

- Ethical AI Practices: Adhere to ethical guidelines for AI development, promoting fairness, transparency, and accountability in investment processes.

- Transparency and Communication: Foster open communication with investors, clearly explaining the role of ML and the associated risks.

Risk Mitigation through Human Oversight:

While ML enhances decision-making, human oversight remains crucial.

- Human-Led Investment Committees: Establish committees comprising experts in finance, data science, and risk management to review ML-driven investment recommendations.

- Regular Review and Adjustment: Schedule periodic reviews of investment portfolios, comparing ML-driven decisions with human insights and making adjustments as necessary.

- Continuous Learning and Adaptation: Encourage a culture of continuous learning and adaptation, where both humans and ML models evolve together, incorporating new data and insights.

Home Sweet Home Equity: Unraveling the Myth of Property as a Financial Asset

You may want to see also

Frequently asked questions

Machine learning algorithms can analyze vast amounts of data, including historical prices, financial news, social media sentiment, and company fundamentals. By identifying patterns and correlations that traditional methods might miss, these algorithms can generate insights to inform investment decisions. For example, natural language processing can analyze news articles to gauge market sentiment, while clustering algorithms can group similar companies for portfolio diversification.

While machine learning models can make predictions, accurately forecasting stock prices is a complex task. These models learn from historical data and can provide probabilities or ranges for price movements. However, market dynamics are influenced by numerous factors, and past performance is not always indicative of future results. It's essential to use these predictions as part of a broader investment strategy and consider risk management techniques.

One of the main challenges is the potential for overfitting, where a model performs well on historical data but fails to generalize to new, unseen data. Additionally, machine learning models might be susceptible to data biases, leading to inaccurate or unfair predictions. It's crucial to validate and test models rigorously and consider the ethical implications of automated investment decisions. Regular monitoring and human oversight are essential to manage risks effectively.