Linear regression is a statistical technique used to model the relationship between a dependent variable and one or more independent variables. In the context of investments, linear regression is used to predict future stock prices and identify key price points based on historical data. By understanding the relationship between price and time, investors can make informed decisions and improve their trading strategies. The linear regression indicator (LRI) is a valuable tool for investors, providing insights into market trends and helping to identify entry and exit points for trades. While linear regression offers a simple and responsive approach, it is important to combine it with other analytical methods and indicators to make well-rounded investment decisions.

| Characteristics | Values |
|---|---|
| Purpose | Predicting future price movements |
| Variables | Dependent variable (price) and independent variable (time) |
| Data | Historical price movements |
| Prediction | Positive slope indicates an upward trend; negative slope indicates a downward trend |
| LRI calculation | LRI = ending value of the least-squares regression line fitted over the chosen number of bars |
| LRI use | Detecting trend reversals, support and resistance levels, and price targets |
| LRI integration | Can be combined with other technical indicators for more accurate analysis |
| LRI benefits | Simplicity, trend identification, dynamic support and resistance |
| LRI drawbacks | Lagging indicator, overemphasis on past data, limited scope |

Using linear regression to predict future prices

Linear regression is a statistical technique used to model the relationship between a dependent variable and one or more independent variables. In the context of investments, linear regression is a valuable tool for traders and investors to predict future prices based on historical data. By understanding the relationship between price and time, traders can make informed decisions and strategic choices.

The Linear Regression Indicator (LRI) gives the expected price at the end of a specified number of bars. It is calculated by fitting a least-squares regression line to the prices over that number of bars and taking the value of the line at the most recent bar: LRI = Ending Value of Linear Regression Line. A positive slope indicates an upward trend, while a negative slope suggests a downward trend, with the steepness of the slope revealing the strength of the trend.
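As a minimal sketch of that calculation, the snippet below fits a least-squares line to the last few closes and reads off its value at the latest bar. It assumes the LRI is taken to be the end value of the fitted line; the function names and the price list are made up for illustration.

```python
def fit_line(values):
    """Least-squares line through the points (0, values[0]), (1, values[1]), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two bars")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def linear_regression_indicator(closes, bars):
    """Return (value of the fitted line at the latest bar, slope of the line)."""
    window = closes[-bars:]
    slope, intercept = fit_line(window)
    return intercept + slope * (len(window) - 1), slope


closes = [100.0, 101.0, 102.0, 103.0, 104.0]  # made-up, perfectly linear prices
lri, slope = linear_regression_indicator(closes, bars=5)
```

With perfectly linear prices the fitted line passes through every bar, so the indicator equals the latest close and the slope equals the per-bar price change.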

Traders can adjust the LRI by altering the number of bars analysed. A larger number of bars results in a smoother line, capturing long-term trends, while a smaller number generates a more responsive line, capturing short-term price fluctuations. This flexibility allows traders to customise the LRI according to their specific needs and time horizons.

When using linear regression, it is important to interpret signals accurately. An upward-sloping LRI with the price above the regression line may suggest a bullish trend, while a downward-sloping LRI with the price below the line could indicate a bearish trend. Integrating the LRI with other technical indicators, such as moving averages and the Relative Strength Index (RSI), can improve the accuracy of analysis and provide a more comprehensive understanding of market conditions.
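The reading described above can be written as a small rule. This is only a sketch: the function name and the "neutral" fallback for mixed readings are assumptions, not part of any standard indicator definition.

```python
def classify_trend(slope, last_price, line_value):
    """Label the two cases described in the text; everything else is 'neutral'."""
    if slope > 0 and last_price > line_value:
        return "bullish"
    if slope < 0 and last_price < line_value:
        return "bearish"
    return "neutral"


reading = classify_trend(slope=0.5, last_price=105.0, line_value=103.0)
```

In practice such a label would be one input among several, alongside indicators such as moving averages or the RSI.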

Linear regression is particularly useful for identifying entry and exit points for trades. When the price crosses the regression line, it may signal an opportunity to enter a trade in the direction of the trend. Conversely, when the price crosses the line in the opposite direction, it may be a strategic moment to exit the trade or take profits.
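A hedged sketch of how such crossings might be detected, given a price series and the matching regression-line values. The function name and both series are hypothetical, and how a trader acts on each crossing is left open.

```python
def find_crossings(prices, line_values):
    """Return (index, direction) pairs where price moves across the line.

    direction is "up" when price goes from at-or-below the line to above it,
    and "down" when it goes from at-or-above the line to below it.
    """
    crossings = []
    for i in range(1, len(prices)):
        prev_diff = prices[i - 1] - line_values[i - 1]
        curr_diff = prices[i] - line_values[i]
        if prev_diff <= 0 < curr_diff:
            crossings.append((i, "up"))
        elif prev_diff >= 0 > curr_diff:
            crossings.append((i, "down"))
    return crossings


prices = [9.0, 10.5, 11.5, 11.0, 12.5]        # made-up closes
line_values = [10.0, 10.4, 10.8, 11.2, 11.6]  # made-up regression line values
signals = find_crossings(prices, line_values)
```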

While linear regression offers simplicity and ease of implementation, it is important to acknowledge its limitations. As a lagging indicator, linear regression relies on historical data and may not always accurately predict future price movements, especially during volatile market conditions. Additionally, it may overemphasise past data, which may not reliably predict future market behaviour as conditions can change rapidly. Furthermore, linear regression assumes a linear relationship between price and time, which may not always hold true in the dynamic financial markets.

Despite these limitations, linear regression is a valuable tool when combined with other analytical methods, such as fundamental analysis. By incorporating linear regression into their investment strategies, traders and investors can make more informed decisions and navigate the complex world of financial markets with greater confidence.

Interpreting linear regression results

Linear regression is a statistical technique used to model linear relationships between variables. It estimates real-number values based on continuous data input. The goal is to calculate an equation for a line that minimises the sum of squared vertical distances between the observed data points and the fitted regression line. This line is then used to predict future values of the dependent variable based on new values of the independent variables.
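For a single independent variable, the usual least-squares criterion can be written out explicitly. The symbols below ($x_i$, $y_i$, $b_0$, $b_1$, $n$) are notation chosen here for illustration, not taken from the original text.

$$
S(b_0, b_1) = \sum_{i=1}^{n} \left( y_i - b_0 - b_1 x_i \right)^2
$$

Setting the partial derivatives of $S$ with respect to $b_0$ and $b_1$ to zero gives the fitted slope and intercept:

$$
b_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
b_0 = \bar{y} - b_1 \bar{x}
$$

Here $\bar{x}$ and $\bar{y}$ are the sample means of the independent and dependent variables.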

The linear regression coefficients describe the mathematical relationship between each independent variable and the dependent variable. The p-values for the coefficients indicate whether these relationships are statistically significant.

Interpreting P-Values in Regression for Variables

The p-values in regression help determine whether the relationships observed in your sample also exist in the larger population. The linear regression p-value for each independent variable tests the null hypothesis that the variable has no correlation with the dependent variable, that is, that its coefficient is zero. Under that null hypothesis, changes in the independent variable are not associated with shifts in the dependent variable. Failing to reject it means there is insufficient evidence to conclude that an effect exists at the population level.

If the p-value for a variable is less than your significance level, your sample data provides enough evidence to reject the null hypothesis for the entire population. Your data favours the hypothesis that there is a non-zero correlation. Changes in the independent variable are associated with changes in the dependent variable at the population level. This variable is statistically significant and probably a worthwhile addition to your regression model.

On the other hand, when a p-value in regression is greater than the significance level, it indicates there is insufficient evidence in your sample to conclude that a non-zero correlation exists.
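The comparison against a significance level can be sketched with `scipy.stats.linregress`, which reports a two-sided p-value for the null hypothesis that the slope is zero. Both data series and the 0.05 threshold are made up for illustration.

```python
from scipy import stats

x = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
trending = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0, 12.9, 15.2, 16.8, 19.1]  # rises with x
flat = [5, 3, 6, 4, 5, 3, 6, 4, 5, 4]                                # no clear trend

alpha = 0.05  # significance level chosen for this example

trend_fit = stats.linregress(x, trending)
flat_fit = stats.linregress(x, flat)

# Reject the null hypothesis of a zero slope only when p < alpha.
trend_significant = trend_fit.pvalue < alpha
flat_significant = flat_fit.pvalue < alpha
```

For the first series the slope is statistically significant at this level; for the second there is not enough evidence to say the slope differs from zero.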

Interpreting Linear Regression Coefficients

The sign of a linear regression coefficient tells you whether there is a positive or negative correlation between each independent variable and the dependent variable. A positive coefficient indicates that as the value of the independent variable increases, the mean of the dependent variable also tends to increase. A negative coefficient suggests that as the independent variable increases, the dependent variable tends to decrease.

The coefficient value signifies how much the mean of the dependent variable changes given a one-unit shift in the independent variable while holding other variables in the model constant. This property of holding the other variables constant is crucial because it allows you to assess the effect of each variable in isolation from the others.

The linear regression coefficients in your statistical output are estimates of the actual population parameters. To obtain unbiased coefficient estimates that have the minimum variance, and to be able to trust the p-values, your model must satisfy the seven classical assumptions of OLS linear regression.

Graphical Representation of Linear Regression Coefficients

A simple way to grasp regression coefficient interpretation is to picture them as linear slopes. The fitted line plot illustrates this by graphing the relationship between a person's height (independent variable) and weight (dependent variable). The numeric output and the graph display information from the same model.

The height coefficient in the regression equation is 106.5. This coefficient represents the mean increase of weight in kilograms for every additional one meter in height. If your height increases by one meter, the average weight increases by 106.5 kilograms.

The regression line on the graph visually displays the same information. If you move to the right along the x-axis by one meter, the line increases by 106.5 kilograms. Keep in mind that it is only safe to interpret regression results within the observation space of your data.

Polynomial Terms to Model Curvature in Linear Models

The previous linear relationship is relatively straightforward to understand. A linear relationship indicates that the change remains the same throughout the regression line. Now, let's move on to interpreting the coefficients for a curvilinear relationship, where the effect depends on your location on the curve. The interpretation of the coefficients for a curvilinear relationship is less intuitive than linear relationships.

In linear regression, you can use polynomial terms to model curves in your data. It is important to keep in mind that we're still using linear regression to model curvature rather than nonlinear regression. That's why curvilinear relationships are referred to in this context rather than nonlinear relationships. Nonlinear has a very specialised meaning in statistics.
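To illustrate why this still counts as linear regression, the sketch below fits a quadratic by ordinary least squares on the columns 1, x and x². The model is curved in x but linear in the coefficients. The data are invented and noise-free, and the names are hypothetical.

```python
import numpy as np

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = 1.0 + 2.0 * x + 3.0 * x**2  # made-up curved data, no noise

# Design matrix columns: constant, x, x squared.
X = np.column_stack([np.ones_like(x), x, x**2])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
b0, b1, b2 = coef


def slope_at(x_value):
    """Effect of a small change in x on the prediction; it varies along the curve."""
    return b1 + 2.0 * b2 * x_value
```

Unlike a straight-line model, the effect of x here is not a single number: `slope_at` returns a different value at each point on the curve.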

Regression Coefficients and Relationships Between Variables

Regression analysis is all about determining how changes in the independent variables are associated with changes in the dependent variable. Coefficients tell you about these changes, and p-values tell you if these coefficients are significantly different from zero.

All of the effects discussed so far have been main effects, which is the direct relationship between an independent variable and a dependent variable. However, sometimes the relationship between an independent variable and a dependent variable changes based on another variable. This condition is an interaction effect.
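An interaction effect can be sketched by adding the product of two predictors as an extra column. The variables `x1` and `x2`, the data, and the coefficients used to generate it are all hypothetical.

```python
import numpy as np

x1 = np.array([0.0, 1.0, 0.0, 1.0, 2.0, 2.0])
x2 = np.array([0.0, 0.0, 1.0, 1.0, 0.0, 1.0])
y = 1.0 + 2.0 * x1 + 3.0 * x2 + 4.0 * x1 * x2  # made-up data with an interaction

# Columns: constant, x1, x2, and the interaction term x1 * x2.
X = np.column_stack([np.ones_like(x1), x1, x2, x1 * x2])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
b0, b1, b2, b12 = coef


def effect_of_x1(x2_value):
    """Change in the prediction per unit of x1, which depends on x2."""
    return b1 + b12 * x2_value
```

With an interaction term in the model, the coefficient on `x1` alone describes its effect only when `x2` is zero.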

Interpreting the Intercept

The Y-intercept, or constant, is the predicted value of the dependent variable when all independent variables are zero. In some cases, this may not be meaningful, for example, if it is not possible for an independent variable to be zero. In these cases, the Y-intercept has no real interpretation and is simply used to anchor the regression line in the right place.

Interpreting Coefficients of Continuous Predictor Variables

For continuous predictor variables, the coefficient represents the difference in the predicted value of the dependent variable for each one-unit difference in the independent variable, assuming all other variables in the model remain constant.

Interpreting Coefficients of Categorical Predictor Variables

For categorical predictor variables, the coefficient is interpreted as the difference in the predicted value of the dependent variable for each one-unit difference in the independent variable, assuming all other variables in the model remain constant. However, since categorical variables are often coded as 0 or 1, a one-unit difference represents switching from one category to another.
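With a single predictor coded 0 or 1, the fitted coefficient is simply the difference between the two group means. The sketch below checks this on invented data; the variable names are hypothetical.

```python
import numpy as np

group = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])          # 0/1 category code
outcome = np.array([10.0, 12.0, 11.0, 15.0, 17.0, 16.0])  # made-up responses

# np.polyfit with degree 1 returns (slope, intercept).
coefficient, intercept = np.polyfit(group, outcome, 1)

mean_difference = outcome[group == 1].mean() - outcome[group == 0].mean()
```

Here `intercept` is the mean of the group coded 0, and `coefficient` is how far the mean of the group coded 1 sits above it.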

Interpreting Coefficients when Predictor Variables are Correlated

Each coefficient is influenced by the other variables in a regression model. Because predictor variables are usually associated, two or more variables may explain some of the same variation in the dependent variable.

Therefore, each coefficient does not measure the total effect on the dependent variable of its corresponding variable. It would if it were the only predictor variable in the model, or if the predictors were independent of each other.

Each coefficient represents the additional effect of adding that variable to the model, assuming the effects of all other variables in the model are already accounted for.
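The following sketch shows how a coefficient shifts once a correlated predictor enters the model. The data are invented so that the outcome is exactly `x1 + 2 * x2`, with `x2` tending to rise alongside `x1`.

```python
import numpy as np

x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x2 = np.array([1.0, 1.0, 2.0, 2.0, 3.0, 4.0])  # correlated with x1
y = x1 + 2.0 * x2                              # made-up outcome

# Model 1: x1 is the only predictor, so it absorbs part of x2's effect.
slope_alone, _ = np.polyfit(x1, y, 1)

# Model 2: both predictors, each coefficient adjusted for the other.
X = np.column_stack([np.ones_like(x1), x1, x2])
coef_full, *_ = np.linalg.lstsq(X, y, rcond=None)
```

On its own, `x1` gets a slope of about 2.2. With `x2` in the model its coefficient drops to 1, the effect that remains after `x2` is accounted for.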

Interpreting Other Specific Coefficients

The interpretation of regression coefficients becomes more complex for more complicated models, for example, when the model contains quadratic or interaction terms. There are also ways to rescale predictor variables to make interpretation easier.

Linear regression vs multiple regression

Linear regression is a statistical technique used to model the relationship between a dependent variable and one or more independent variables. In the context of investments, linear regression is used to predict future prices or values based on historical data.

The Linear Regression Indicator (LRI) is a formula used to calculate the expected price at the end of a specified number of bars. A positive slope indicates an upward trend, while a negative slope indicates a downward trend. The slope's steepness indicates the strength of the trend.

While linear regression is a valuable tool for investors, it has some limitations. It is a lagging indicator, relying on historical data, and may not accurately predict future price movements, especially during volatile market conditions. It also heavily emphasises past data, which may not always be a reliable predictor of future behaviour.

Multiple regression is a broader class of regression analysis, which includes both linear and nonlinear relationships with multiple explanatory variables. It is used to determine the relationship between a dependent variable and multiple independent variables, with each variable having its own coefficient to ensure appropriate weighting.

Multiple linear regression is used when multiple independent variables determine the outcome of a single dependent variable. It is often applied when forecasting more complex relationships.

For example, consider an analyst who wants to establish a relationship between the daily change in a company's stock prices and the daily change in trading volume. Using multiple regression, the analyst can determine the impact of several factors, such as the company's P/E ratio, dividends, and the prevailing inflation rate, on the stock price:

Daily Change in Stock Price = (Coefficient)(Daily Change in Trading Volume) + (Coefficient)(Company's P/E Ratio) + (Coefficient)(Dividend) + (Coefficient)(Inflation Rate)
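A minimal sketch of estimating such coefficients by least squares. Everything here is synthetic: the feature values come from a seeded random generator and the "true" coefficients are invented, so the numbers say nothing about real markets. The model is fitted without an intercept to mirror the equation above, although in practice an intercept column is usually included.

```python
import numpy as np

rng = np.random.default_rng(0)

names = ["volume_change", "pe_ratio", "dividend", "inflation_rate"]  # hypothetical labels
true_coefficients = np.array([0.5, -0.2, 1.5, -0.8])                 # invented values

# 50 synthetic observations of the four explanatory variables.
features = rng.normal(size=(50, 4))
price_change = features @ true_coefficients  # noise-free synthetic target

# One coefficient per explanatory variable, estimated jointly.
estimated, *_ = np.linalg.lstsq(features, price_change, rcond=None)
fitted = dict(zip(names, estimated))
```

Because the synthetic target contains no noise, the estimates recover the invented coefficients; with real data they would only approximate the underlying relationships.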

Multiple linear regression is a more specific and complex calculation than simple linear regression. It is used for more complex relationships that require careful consideration of multiple variables.

In summary, simple linear regression focuses on the relationship between a dependent variable and a single independent variable, while multiple regression extends this to include multiple independent variables, providing a more comprehensive analysis. Multiple regression is particularly useful when dealing with complex relationships and multiple factors influencing an outcome.

Linear regression in machine learning

Linear regression is a fundamental machine learning algorithm that has been widely used for many years due to its simplicity, interpretability, and efficiency. It is a type of supervised machine learning algorithm that learns from labelled datasets and fits the linear function that best matches the data points, which can then be used for predictions on new datasets.

In an ML context, linear regression finds the relationship between features and a label. For example, if we wanted to predict a car's fuel efficiency in miles per gallon based on how heavy the car is, we could use linear regression to find the best-fit line through the data points. The equation for this would be:

Y' = b + w1x1

Where:

- Y' is the predicted label (output)

- b is the bias of the model (y-intercept)

- w1 is the weight of the feature (slope)

- x1 is a feature (input)

The model calculates the weight and bias that produce the best fit during training.
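One common way to find the weight and bias is gradient descent on the mean squared error. The sketch below is an assumption about the training method, since the text does not name one, and the car data (weight in thousands of pounds, miles per gallon) are invented.

```python
def train(xs, ys, learning_rate=0.05, steps=5000):
    """Fit y' = b + w * x by gradient descent on mean squared error."""
    b, w = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [(b + w * x) - y for x, y in zip(xs, ys)]
        grad_b = 2.0 * sum(errors) / n
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * grad_b
        w -= learning_rate * grad_w
    return b, w


car_weight = [1.0, 2.0, 3.0, 4.0]       # made-up, thousands of pounds
miles_per_gallon = [35.0, 30.0, 25.0, 20.0]

bias, weight = train(car_weight, miles_per_gallon)
```

The learning rate and step count were picked to converge on this small example; other data, especially unscaled features, would generally need different settings.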

Linear regression can also be used with multiple features, each having a separate weight. For example:

Y' = b + w1x1 + w2x2 + w3x3 + w4x4 + w5x5

Python has methods for finding a relationship between data points and drawing a line of linear regression. The scipy module can be used to compute the coefficient of correlation, which indicates how well the data fits a linear regression.
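A short sketch using `scipy.stats.linregress`, which returns the slope, intercept and correlation coefficient of a simple linear fit. The data points and the `predict` helper are made up for illustration.

```python
from scipy import stats

x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]  # made-up values, roughly y = 2x

result = stats.linregress(x, y)

# rvalue is the correlation coefficient: values near 1 or -1 mean the
# points lie close to a straight line, values near 0 mean they do not.
r = result.rvalue


def predict(x_value):
    """Predicted y on the fitted regression line."""
    return result.intercept + result.slope * x_value
```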

The advantages of linear regression include its simplicity, interpretability, and efficiency. However, it assumes a linear relationship between the dependent and independent variables, and ordinary least squares is sensitive to outliers and to multicollinearity. It often requires feature engineering, and it can underfit when the true relationship is not linear or overfit when many features are used with little data.

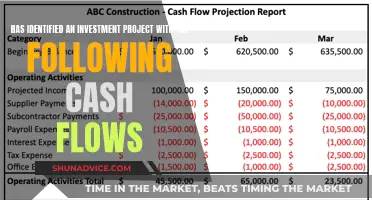

Linear regression applications beyond business

Linear regression is also used well outside trading and investing, including:

Market Analysis

Using a regression model, companies can determine how products perform in the market by establishing relationships between several variables, such as social media engagement, pricing, and the number of sales. This information can be used to implement specific marketing strategies, maximise sales, and increase revenue. For example, a simple linear model can be used to understand how price affects sales and evaluate the strength of the relationship between the two variables.

Sports Analysis

Sports analysts can use linear regression to determine a team's or player's performance in a game. They can then use this information to compare teams and players and provide valuable insights to their followers. Additionally, they can predict game attendance based on the status of the teams playing and the market size, allowing them to advise team managers on optimal game venues and ticket prices to maximise profits.

Environmental Health

Specialists in environmental health can use linear regression to evaluate the relationship between natural elements, such as soil, water, and air. For instance, they can study the relationship between the amount of water and plant growth. This helps environmentalists predict the effects of air or water pollution on the environment.

Medicine

Medical researchers can use linear regression to determine the relationship between independent characteristics, such as age and body weight, and dependent ones, such as blood pressure. By doing so, they can identify risk factors associated with diseases, identify high-risk patients, and promote healthy lifestyles.

Frequently asked questions

Linear regression is a statistical technique used to model the relationship between a dependent variable and one or more independent variables. In the context of investments, linear regression is used to predict future stock prices and make informed trading decisions.

Linear regression assumes a linear relationship between an independent variable (e.g. time) and a dependent variable (e.g. stock prices). By fitting a line to historical data and assuming that price deviations from that line roughly follow a bell curve (normal distribution), investors can judge when a stock is unusually far above the line (overbought) or below it (oversold) and make predictions about future price movements.
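One hedged reading of the bell-curve idea is to measure how many standard deviations the latest price sits from the regression line fitted to earlier prices. The two-standard-deviation threshold, the function names, and the price history below are assumptions made for illustration.

```python
from statistics import pstdev


def fit_line(values):
    """Least-squares line through (0, values[0]), (1, values[1]), ...; returns (slope, intercept)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def deviation_score(history, latest):
    """Distance of `latest` from the projected line, in residual standard deviations."""
    slope, intercept = fit_line(history)
    residuals = [v - (intercept + slope * i) for i, v in enumerate(history)]
    spread = pstdev(residuals)
    if spread == 0:
        raise ValueError("history lies exactly on a line; spread is zero")
    projected = intercept + slope * len(history)  # line extended one bar ahead
    return (latest - projected) / spread


def label(score, threshold=2.0):
    """Flag prices more than `threshold` standard deviations from the line."""
    if score > threshold:
        return "overbought"
    if score < -threshold:
        return "oversold"
    return "neutral"


# Made-up history: an upward line with alternating +1 / -1 wobble.
history = [100.0 + i + (1.0 if i % 2 == 0 else -1.0) for i in range(10)]
status = label(deviation_score(history, latest=113.0))
```

The normality assumption is a strong one for real prices, so a flag like this is better treated as a prompt for further analysis than as a signal on its own.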

Linear regression has some limitations. It assumes a linear relationship between variables, but in reality, the relationship may be nonlinear. It also relies on historical data, so it may not always accurately predict future price movements, especially during periods of high market volatility or when unpredictable factors, such as news flows, come into play.